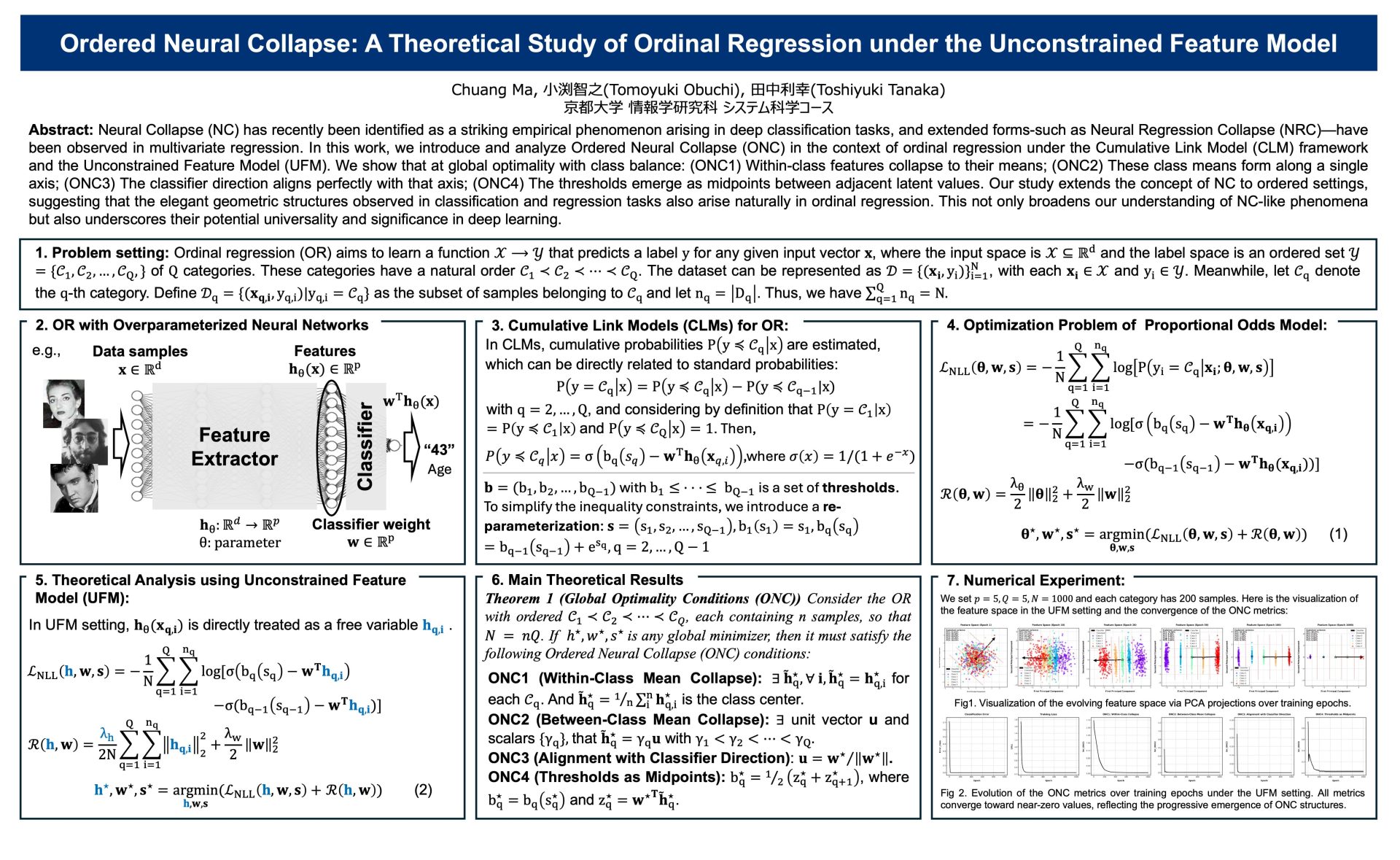

Neural Collapse (NC) is a striking empirical phenomenon recently identified in deep classification tasks, and extended forms such as Neural Regression Collapse (NRC) have been observed in multivariate regression. In this work, we introduce and analyze Ordered Neural Collapse (ONC) for ordinal regression under the Cumulative Link Model (CLM) framework and the Unconstrained Feature Model (UFM). We show that, at the global optimum under class balance, (ONC1) within-class features collapse to their class means; (ONC2) these class means lie along a single axis; (ONC3) the classifier direction aligns exactly with that axis; and (ONC4) the thresholds lie at the midpoints between adjacent latent values. Our study extends the concept of NC to ordered settings, suggesting that the elegant geometric structures observed in classification and regression also arise naturally in ordinal regression. This broadens our understanding of NC-like phenomena and underscores their potential universality and significance in deep learning.
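The geometry described by ONC1 to ONC4 can be illustrated with a small numerical sketch. It assumes a cumulative logit link, P(y ≤ k | z) = σ(θ_k − z), and uses a hand-picked, hypothetical axis, set of class positions, and classifier scale. It does not train a model or reproduce the UFM analysis; it only checks that collapsed, collinear class means, a classifier aligned with their axis, and midpoint thresholds give a CLM that assigns each class mean to its own class.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def midpoint_thresholds(latents):
    # ONC4: each threshold sits halfway between adjacent (sorted) class latent values.
    return [(lo + hi) / 2.0 for lo, hi in zip(latents, latents[1:])]


def clm_probs(z, thresholds):
    # Assumed cumulative logit link: P(y <= k | z) = sigmoid(theta_k - z) for k < K.
    cdf = [sigmoid(t - z) for t in thresholds] + [1.0]
    # Class probabilities are differences of consecutive cumulative probabilities.
    return [cdf[0]] + [cdf[k] - cdf[k - 1] for k in range(1, len(cdf))]


# Hypothetical ONC geometry in R^3 with K = 4 ordered classes.
axis = [1 / 3, 2 / 3, 2 / 3]        # unit vector: the shared axis of the class means (ONC2)
positions = [-3.0, -1.0, 1.0, 3.0]  # hypothetical ordered positions along that axis
# ONC1: every sample of class k coincides with its class mean, so the means suffice.
class_means = [[p * a for a in axis] for p in positions]
w = [2.0 * a for a in axis]         # ONC3: classifier direction parallel to the axis

latents = [dot(w, mu) for mu in class_means]  # approximately [-6, -2, 2, 6]
thresholds = midpoint_thresholds(latents)      # approximately [-4, 0, 4]

for k, z in enumerate(latents):
    probs = clm_probs(z, thresholds)
    pred = max(range(len(probs)), key=probs.__getitem__)
    print(f"class {k}: predicted {pred}, probs {[round(p, 3) for p in probs]}")
```

With evenly spaced latent values, midpoint thresholds place each class mean symmetrically between its two thresholds, which is why each mean receives the largest probability for its own class.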

Keywords: Facial age estimation, ordinal regression, interpretability, neural network optimization

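| Name | Course | Laboratory | Position/Year |
|---|---|---|---|
| Chuang Ma | Systems Science Course | Mathematical Information Systems | 1st-year Ph.D. student |
| Tomoyuki Obuchi (小渕智之) | Systems Science Course | Mathematical Information Systems | Associate Professor |
| Toshiyuki Tanaka (田中利幸) | Systems Science Course | Mathematical Information Systems | Professor |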