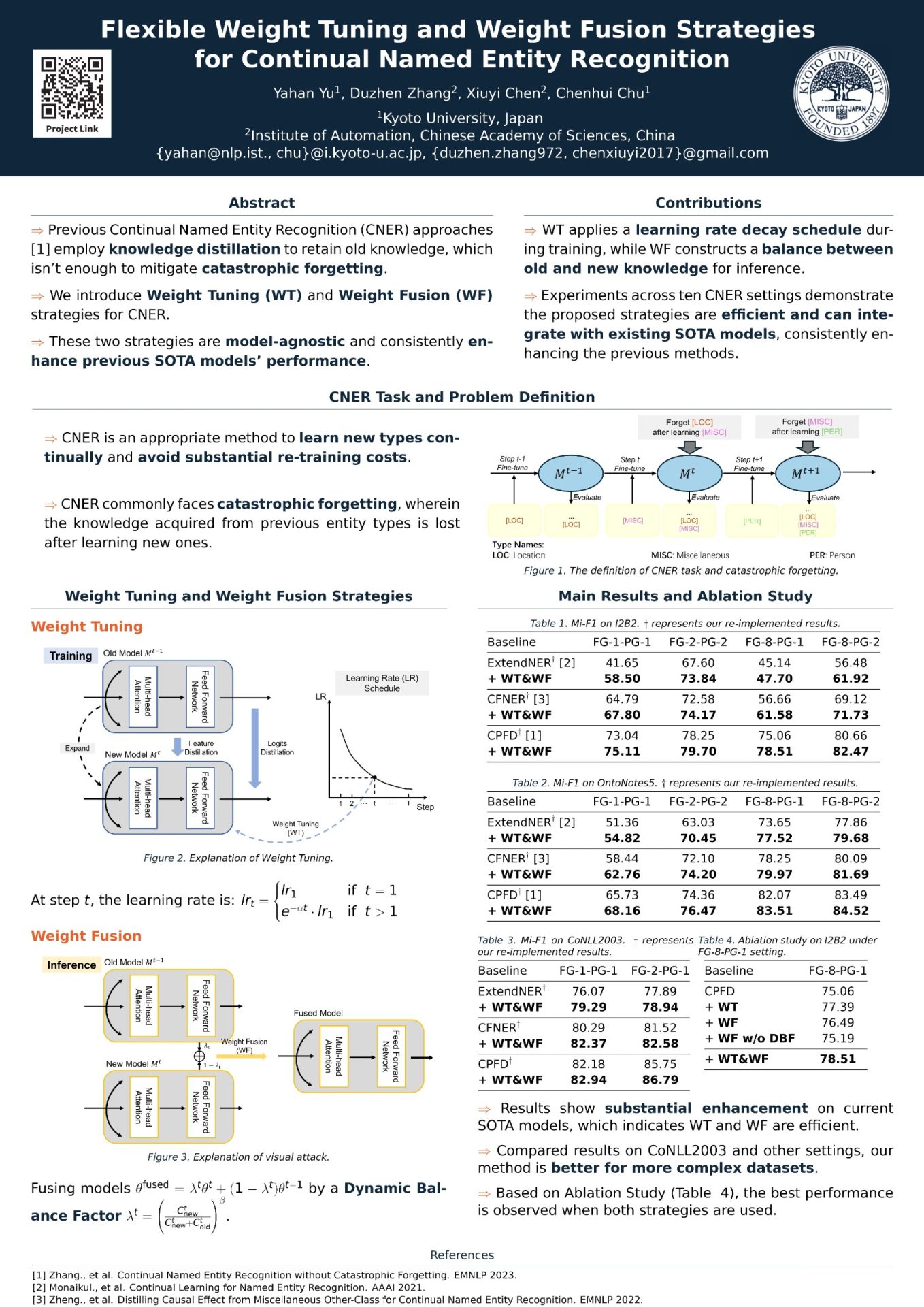

Continual Named Entity Recognition (CNER) aims to learn new entity types sequentially while mitigating catastrophic forgetting of old entity types. Traditional CNER approaches commonly employ knowledge distillation to retain old knowledge in the current model. However, distillation only constrains the representations of the old and new models to be consistent, so existing methods that rely solely on it still suffer from catastrophic forgetting. To further alleviate forgetting of old entity types, this paper introduces flexible Weight Tuning (WT) and Weight Fusion (WF) strategies for CNER. The WT strategy, applied at each training step, employs a learning rate schedule on the parameters of the current model. After the current task has been learned, the WF strategy dynamically integrates knowledge from both the current and previous models for inference. Notably, both strategies are model-agnostic and integrate seamlessly with existing State-Of-The-Art (SOTA) models. Extensive experiments demonstrate that WT and WF consistently improve the performance of previous SOTA methods across ten CNER settings on three datasets.
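The abstract does not state the exact fusion rule used by WF. As an illustrative assumption only, a common generic form of weight fusion is element-wise linear interpolation between the previous and current model parameters, $\theta_{\text{fused}} = \alpha\,\theta_{\text{old}} + (1 - \alpha)\,\theta_{\text{new}}$. The sketch below shows that generic interpolation in plain Python; the function name `fuse_weights`, the parameter name `classifier.weight`, and the fixed coefficient `alpha` are hypothetical, and a fixed `alpha` stands in for whatever dynamic weighting the WF strategy actually applies.

```python
from typing import Dict, List

# Hypothetical parameter container: parameter name -> flat list of weights.
Params = Dict[str, List[float]]


def fuse_weights(old: Params, new: Params, alpha: float) -> Params:
    """Generic weight interpolation: alpha * old + (1 - alpha) * new.

    Assumption: this is a generic weight-averaging sketch, not the paper's
    WF rule. `alpha` is a fixed, hypothetical mixing coefficient.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if old.keys() != new.keys():
        raise ValueError("models must share the same parameter names")
    fused: Params = {}
    for name, new_values in new.items():
        old_values = old[name]
        if len(old_values) != len(new_values):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Mix each old weight with the corresponding new weight.
        fused[name] = [alpha * o + (1.0 - alpha) * n
                       for o, n in zip(old_values, new_values)]
    return fused


# Toy usage: two "models", each with a single 3-element weight vector.
old_model = {"classifier.weight": [1.0, 0.0, 2.0]}
new_model = {"classifier.weight": [3.0, 4.0, 2.0]}
print(fuse_weights(old_model, new_model, alpha=0.5))
```

With `alpha=0.5` each fused weight is the midpoint of the old and new values; `alpha=1.0` recovers the previous model and `alpha=0.0` the current one. In a continual setting the current model usually has extra output units for new entity types, so a practical variant would fuse only the parameters the two models share; this sketch simply rejects mismatched shapes.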

This approach suits applications in which domain-specific terminology keeps changing, such as financial analysis, healthcare, e-commerce, and social media monitoring. By continuously learning new entity types from incoming data, the system can maintain high accuracy and stay relevant in real-time applications. This capability is essential for organizations that aim to extract actionable insights from large-scale, rapidly changing datasets.

| Name | Course | Laboratory | Position/Year |
|---|---|---|---|
| 于 雅涵 (YU, YAHAN) | Intelligence Science and Technology Course | Language Media Laboratory | 2nd-year doctoral student |