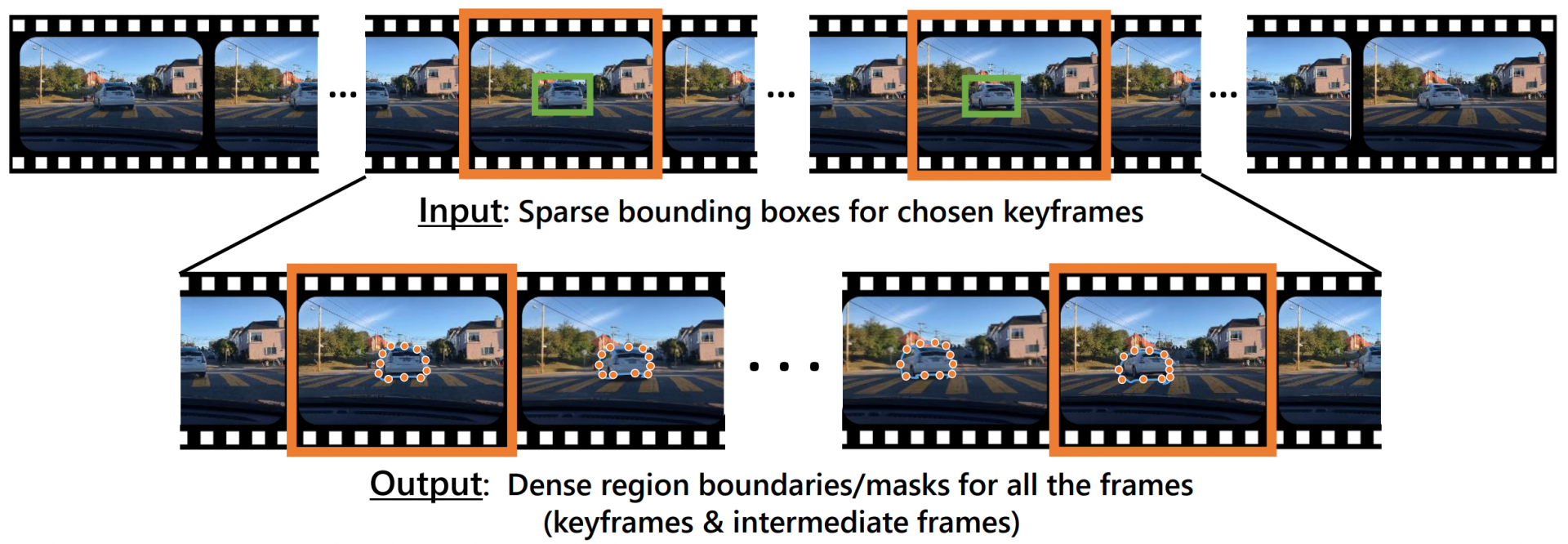

We introduce a novel dense video annotation method that only requires sparse bounding box supervision. We fit an iteratively deforming volumetric graph to the video sub-sequence bounded by two chosen keyframes, so that its uniformly initialized graph nodes gradually move to the key points on the sequence of region boundaries. The model consists of a set of deep neural networks, including normal convolutional networks for frame-wise feature map extraction and a volumetric graph convolutional network for iterative boundary point finding. By propagating and integrating node-associated information (sampled from feature maps) over graph edges, a content-agnostic prediction model is learned for estimating graph node location shifts. The effectiveness and superiority of the proposed model and its major components are demonstrated on two latest public datasets: a large synthetic dataset Synthia and a real dataset named KITTI-MOTS capturing natural driving scenes. This paper won the best student paper in BMVC2020.

Driven by many real applications, video-based vision tasks attract more and more attention. It leads to high demand for detailed and densely-annotated video data. However, manually annotating such labels for video frames is a highly time-consuming and boring task. Our model and future related works could accelerate such an annotation procedure.

| 氏名 | 専攻 | 研究室 | 役職/学年 |

|---|---|---|---|

| 許 煜正 | 知能情報学専攻 | コンピュータビジョン | 博士2回生 |

| 西野 恒 | 知能情報学専攻 | コンピュータビジョン | 教授 |

| 延原 章平 | 知能情報学専攻 | コンピュータビジョン | 准教授 |

| 伍 洋 | 知能情報学専攻 | コンピュータビジョン | 特定講師 |

| Nur Sabrina binti Zuraimi | 知能情報学専攻 | コンピュータビジョン | その他学生 |